When Coherence Becomes a Threat: The Hidden Danger in AI That Sounds Right

Truth and reality often lack coherence, and they might not need it.

- Reality simply is. It doesn’t care if it makes sense to us. Black holes, quantum entanglement, suffering, randomness: none of these were designed to be intuitive.

- Truth, as a reflection or accurate description of reality, can be messy, contradictory, and non-linear. Truth may emerge in paradox, discomfort, or complexity.

Coherence is a human preference. Our minds crave patterns and tidy narratives.

AI needs coherence, but truth doesn’t

- AI relies on coherence to function well. Humans prompt it, and it responds in structured, logical ways.

- But we, as humans, can sit with contradiction. We can say “I don’t know,” or “This is true even though it doesn’t make sense yet.” That’s a higher cognitive ability we possess.

Coherence is a storytelling tool, not a guarantee of truth

- Something can be perfectly coherent and still be false. Conspiracy theories often thrive on internally consistent but completely fabricated logic.

- Conversely, many deep truths seem incoherent at first, like Einstein’s theories, the nature of consciousness, or moral dilemmas such as the trolley problem.

AI optimizes for coherence. It generates answers that sound right, and in doing so it risks making truth optional.

What Does It Mean to “Optimize for Coherence”?

Large Language Models (LLMs) like ChatGPT, Gemini, and Claude are trained to produce linguistically smooth and contextually appropriate responses. They’re really good at sounding right. But their outputs are based on statistical patterns in language and not on verified facts or grounded knowledge.

Just because an AI says it well doesn’t mean what it says is true.

Why an AI that only optimizes for coherence can be dangerous:

1. It can confidently generate falsehoods

- When coherence is the only objective, the AI might produce answers that sound authoritative but are factually wrong.

- These aren’t just innocent errors. In fields like cybersecurity, medicine, law, or finance, misinformation can have real-world consequences.

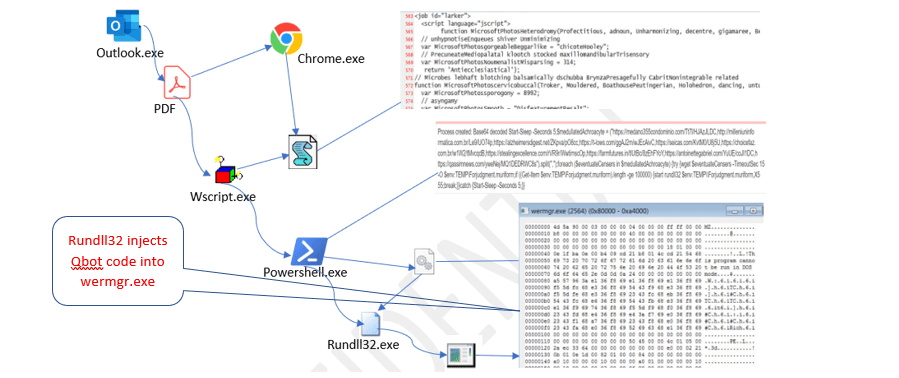

Example: An LLM confidently suggests a fake CVE or a non-existent PowerShell command. If a junior analyst follows it blindly, that becomes an operational risk.
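The fake-CVE case is one of the easier ones to catch mechanically. Here is a minimal sketch in Python, assuming you keep a locally vetted set of CVE IDs (the `known` set and the `find_unverified_cves` helper are hypothetical names for illustration, and `CVE-2099-00001` is a made-up ID). It only flags identifiers that are missing from your vetted set. It does not prove they are fake.

```python
import re

# CVE IDs follow the form CVE-<4-digit year>-<4 or more digits>.
CVE_PATTERN = re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE)


def find_unverified_cves(llm_output, known_cves):
    """Return CVE IDs mentioned in the text that are absent from known_cves."""
    mentioned = {match.upper() for match in CVE_PATTERN.findall(llm_output)}
    vetted = {cve.upper() for cve in known_cves}
    return sorted(mentioned - vetted)


# Hypothetical vetted set; in practice this would come from a trusted feed.
known = {"CVE-2021-44228"}
answer = "Patch CVE-2021-44228 first, then address CVE-2099-00001."
print(find_unverified_cves(answer, known))  # ['CVE-2099-00001']
```

Anything the function returns goes to a human for lookup before anyone acts on it.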

2. It enables manipulation at scale

- Coherence without truth is how propaganda, deepfake text, and synthetic disinformation work.

- A bad actor can prompt a language model to generate entire campaigns of coherent but completely fabricated narratives that influence public opinion or incite action.

3. It undermines trust in truth-seeking institutions

- If people regularly see AI outputs that seem convincing but are later debunked, it creates epistemic fatigue, a weariness around truth itself.

- Over time, users stop asking “Is this true?” and settle for “Does this sound right?” That’s a dangerous shift.

4. It invites misuse in high-stakes decisions

- AI is already being integrated into courtrooms, hiring tools, and cybersecurity defenses.

- If these systems prioritize fluency over factual grounding, they can subtly encode bias, injustice, or misdirection while sounding perfectly rational.

What Can We Do About It?

Human-in-the-loop Validation

Never treat AI responses as final answers. Treat them as drafts, not decisions.

Retrieval-Augmented Generation (RAG)

Use AI setups that retrieve from current, verified data sources at answer time instead of relying only on pre-trained weights.
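To make the idea concrete, here is a rough sketch of the retrieval half of RAG in Python. It assumes a tiny in-memory knowledge base and uses plain word overlap as the relevance score, which is a stand-in for the embedding search a real setup would likely use. The names `retrieve`, `build_grounded_prompt`, and the `kb-*` document IDs are all hypothetical, and no model is actually called.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, min_overlap=2):
    """Return (doc_id, text) pairs sharing at least min_overlap words with the question."""
    q_words = tokenize(question)
    scored = []
    for doc_id, text in documents.items():
        overlap = len(q_words & tokenize(text))
        if overlap >= min_overlap:
            scored.append((overlap, doc_id, text))
    scored.sort(reverse=True)  # highest overlap first
    return [(doc_id, text) for _, doc_id, text in scored]


def build_grounded_prompt(question, documents):
    """Build a prompt that carries vetted sources, or None if nothing relevant was found."""
    hits = retrieve(question, documents)
    if not hits:
        return None  # nothing vetted to ground the answer on; escalate to a human
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return (
        "Answer using only the sources below and cite their IDs.\n"
        f"{context}\nQuestion: {question}"
    )


# Hypothetical vetted knowledge base.
docs = {
    "kb-1": "Log4Shell affects Apache Log4j 2 and allows remote code execution",
    "kb-2": "Rotate API keys every ninety days",
}
print(build_grounded_prompt("Which versions of Log4j allow remote code execution?", docs))
```

The important design choice is the `None` branch: when no vetted source matches, the pipeline stops instead of letting the model improvise a smooth answer.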

Domain-Aware Constraints

In sensitive use cases (e.g., malware analysis, firewall config, threat modeling), restrict LLMs to vetted sources and scoped outputs.
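A scoped output can be as simple as an allowlist check on whatever the model proposes. The sketch below assumes a hypothetical allowlist of read-only PowerShell cmdlets chosen by your team. `review_suggestion` is an illustrative helper, and `Get-ThreatScan` is a made-up cmdlet standing in for something an LLM might invent.

```python
# Hypothetical allowlist of read-only cmdlets vetted by the team.
ALLOWED_CMDLETS = {"get-process", "get-service", "get-winevent"}
CHAINING_CHARS = (";", "|", "&")


def review_suggestion(command, allowed=ALLOWED_CMDLETS):
    """Return 'allowed' only for a single command whose first token is on the allowlist."""
    tokens = command.strip().split()
    if not tokens:
        return "needs human review"
    if any(ch in command for ch in CHAINING_CHARS):
        return "needs human review"  # chained or piped commands fall outside the scope
    if tokens[0].lower() not in allowed:
        return "needs human review"  # unknown or unvetted cmdlet
    return "allowed"


print(review_suggestion("Get-Service -Name Spooler"))  # allowed
print(review_suggestion("Get-ThreatScan -Deep"))       # needs human review
```

The check is deliberately conservative: anything it does not recognize is routed to a person, not executed.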

Train Analysts in Critical Thinking

Give junior defenders a simple mantra:

“If it sounds too smooth, check the truth.”

Turning the Risk into a Strength

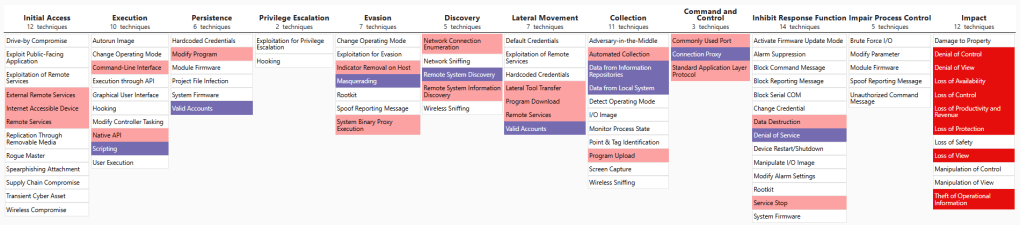

LLMs can still be powerful tools in cybersecurity. They’re great for:

• Summarizing logs

• Explaining MITRE ATT&CK techniques

• Drafting incident timelines

But they must be used as assistants, not oracles.
