Explore the evolving attack surfaces and defense strategies for securing Large Language Models (LLMs) and GenAI systems.

Introduction

This blog series is crafted for cybersecurity professionals, AI architects, and technology learners who want to understand how to analyze, attack, and protect LLM-powered environments.

Each post is based on real-world use cases, practical labs, and insights from our full-length Udemy course on LLM Cybersecurity. Whether you’re exploring the topic for the first time or want to deepen your AI security expertise, this series will guide you.

Table of Contents: Blog Series

1. What Are Large Language Models (LLMs)? A Beginner’s Guide to GenAI

In Part 1, we will start with the basics: what LLMs are, how they work, and how they differ from broader GenAI tools. This part will also cover the evolution of Transformers and the key risks.

2. Anatomy of an LLM System: Dissecting the Layers

In Part 2, we will walk through a technical breakdown of the architecture behind LLM systems: the application layer, LLM processing unit, models, data stores, and external APIs.

3. LLM Attack Surfaces: How Risks Expand With Scale

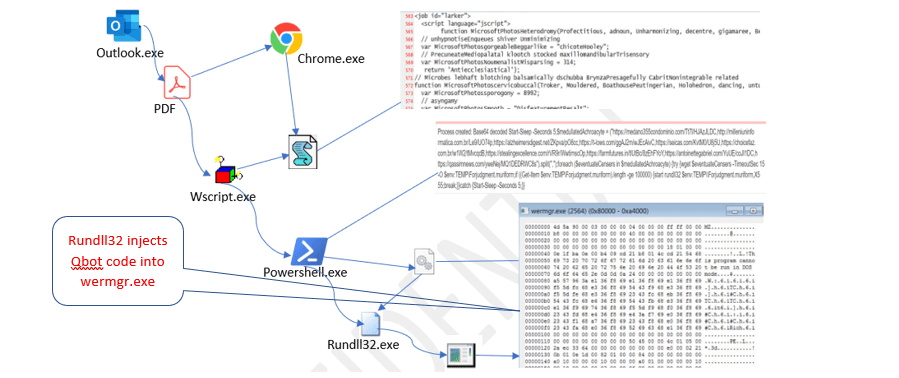

In Part 3, we will explore how integration, public exposure, and LLM-specific behaviors such as hallucination and prompt injection create a new security landscape.

4. Securing Ollama Servers: Why They’re Exposed and How to Protect Them

In Part 4, we will focus on a specific real-world scenario: exposed Ollama servers. We will examine why so many Ollama servers are reachable over the public Internet without authentication, and how to implement basic security controls to mitigate that exposure.

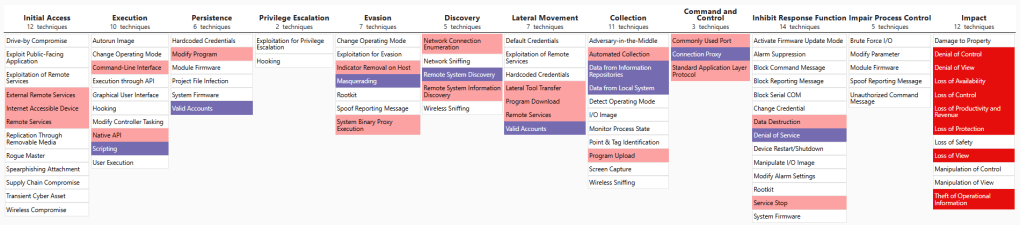

5. MITRE ATLAS for AI Security: Understanding the Framework with a Case Study

In Part 5, we will shift our focus to MITRE ATLAS, a framework designed specifically for AI cybersecurity. We will also dive into a real-world case study involving OpenAI vs. DeepSeek.
